Tag: Delivery

-

Prioritisation

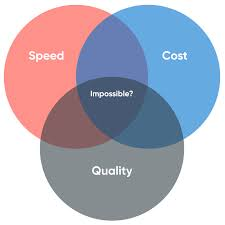

How do we get Senior Leaders on board? For the last 8 months I’ve been busy running Product Management training for various Public Sector clients, and one topic cohorts are always keen to discuss is prioritisation. Quite often there are multiple areas of prioritisation people want to understand further: firstly, how can…

-

The importance of having a Product Mindset.

This week is @DWPDigital’s Product Mindset week, with some fantastic sessions and Digital Showcases on the work DWP have been doing to deliver Products that add value for users. Sessions cover topics such as storytelling, Design thinking, Product Management, Prioritisation and how we should be using data to help decision making. The resounding…

-

Empowering your Teams

How to create the culture needed for success. One discussion that comes up regularly when I’m running Product Management training courses, or coaching new Delivery Teams, is how they can encourage their leaders to empower them and trust their decisions more. Whether it’s having their leaders back their decisions when something has gone wrong, or…

-

Being Product Led

Helping organisations move from ‘doing Product Management’ to ‘being Product led’. As you might expect from someone whose career has been within the Product space, and who has worn various ‘Head of Product’ type hats over the years, I spend a lot of time thinking and talking about Product Management. For the last few months I’ve…

-

Encouraging join up across Departments.

Yesterday at GovCampNorth, there were multiple pitches all based on one common theme: how we can encourage and support collaboration and sharing across Government Departments. The reasons given for increasing this collaboration varied, from improving opportunities for re-use and the sharing of best practices to the opportunity to tackle and solve societal problems that…

-

Onwards and Upwards

Today I say goodbye to my work with Kainos, and begin my new role as a Director at Deloitte Digital. I’ve really enjoyed the last 18 months with Kainos, and the opportunity it gave me to step back and focus on delivering “A thing” after I was left feeling rather burnt out and exhausted by…

-

How do we make legacy transformation cool again?

Guest blog first published during #TechUk’s Public Sector week on the 24th of June 2022. Legacy Transformation is one of those phrases: you hear it and just… sigh. It conjures up images of creaking tech stacks and migration plans that are more complex, and last longer, than your last relationship. Within the Public Sector,…

-

Maximising the Lean Agility approach in the Public Sector

First published on the 26th of June 2022 as part of #TechUk’s Public Sector week, co-authored by Matt Thomas. We are living in a time of change, characterised by uncertainty. Adapting quickly has never been more important than today, and for organisations, this often means embracing and fully leveraging the potential of digital tools.…

-

Becoming Product Led

Recently I was asked how I would go about moving an organisation to being Product Led when agile and user-centric design are equally new to the company, or when agile has not delivered in the way that was expected. Before diving into the how, I think it’s worth first considering the what and they…

-

Assessing Innovation

(Co-written with Matt Knight.) Some background, for context: just over a month ago I was approached to ask if I could provide some advice on assessments to support phase two of the GovTech Catalyst (GTC) scheme. For those who aren’t aware of the GovTech Catalyst Scheme, there’s a blog that explains how the scheme…