Category: Leadership

-

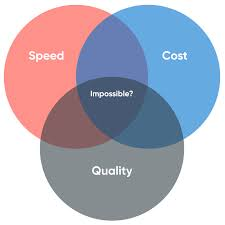

Prioritisation

How do we get Senior Leaders on board? For the last 8 months I’ve been busy running Product Management training for various Public Sector clients, and one topic cohorts are always keen to focus on and discuss is prioritisation. Quite often there are multiple areas of prioritisation people are keen to understand further; firstly, how can…

-

Ada Lovelace Day 2023 – Resilience

To celebrate #AdaLovelaceDay this year, I was lucky enough to get to spend the day with the wonderful folks at @DigitalHer (part of Manchester Digital). In the morning they ran an Inspiration Breakfast event for women working within Tech, with some lightning talks and breakout sessions. Then in the afternoon they ran an Inspiration…

-

Onboarding – It’s an experience

Every time we start a role at a new company, we go through Onboarding, and for every company it’s different. So what are the things we need to consider as we try to perfect the onboarding experience, especially in this more virtual/remote/hybrid working world? Having just gone through this process again with Deloitte, I…

-

Onwards and Upwards

Today I say goodbye to my work with Kainos, and begin my new role as a Director at Deloitte Digital. I’ve really enjoyed the last 18 months with Kainos, and the opportunity it gave me to step back and focus on delivering “A thing” after I was left feeling rather burnt out and exhausted by…

-

How do we make legacy transformation cool again?

Guest blog first published in #TechUk’s Public Sector week here on the 24th of June 2022. Legacy Transformation is one of those phrases; you hear it and just… sigh. It conjures up images of creaking tech stacks and migration plans that are more complex, and last longer, than your last relationship. Within the Public Sector,…

-

Maximising the Lean Agility approach in the Public Sector

First published on the 26th June 2022 as part of #TechUk’s Public Sector week here; co-authored by Matt Thomas. We are living in a time of change, characterised by uncertainty. Adapting quickly has never been more important than today, and for organisations, this often means embracing and fully leveraging the potential of digital tools.…

-

The manager’s guide to understanding ADHD

(and why it’s often misunderstood in cis women in particular) Let’s talk about ADHD. We’ve all seen characters with ADHD on TV and in books etc. Try and think of a few examples, and I bet they all fit in one stereotypical box: “the naughty young white boy acting out in class”. But not only…

-

Neurodiverse parenting

One thing I’ve noticed, since I started blogging and talking more openly about being Neurodiverse myself, is how many people have reached out to me virtually or in real life to chat about how they as parents support their children who are (or might be) neurodiverse. I’ve spoken publicly many times (especially on Twitter) about…

-

Looking for the positives

We’re all skilled in many different ways, so when it comes to our careers, why do we apologise for our weaknesses rather than celebrate our strengths? Another slightly introspective blog from me today, but one I think is worth writing, as I know I’m not the only one guilty of this. As we move through our careers,…

-

Making User Centred Design more inclusive

How do we support people from minority or disadvantaged backgrounds to get a career in User Centred Design? If you look around for ways to get a career in Digital/Tech, you would probably trip over half a dozen Apprenticeships, Academies or Earn as you Learn Schemes, not to mention Graduate Schemes, without even trying. However,…