Tag: GDS

-

Assessing Innovation

(co-written with Matt Knight) Some background, for context: just over a month ago I was approached and asked whether I could provide some advice on assessments to support phase two of the GovTech Catalyst (GTC) scheme. For those who aren’t aware of the GovTech Catalyst scheme, there’s a blog here that explains how the scheme…

-

Agile Delivery in a Waterfall procurement world

One of the things that has really become apparent when moving ‘supplier side’ is how much the procurement processes used by the public sector to tender work don’t facilitate agile delivery. The process of bidding for work, certainly as an SME, is an industry in itself. This month alone we’ve seen multiple Invitations to Tender…

-

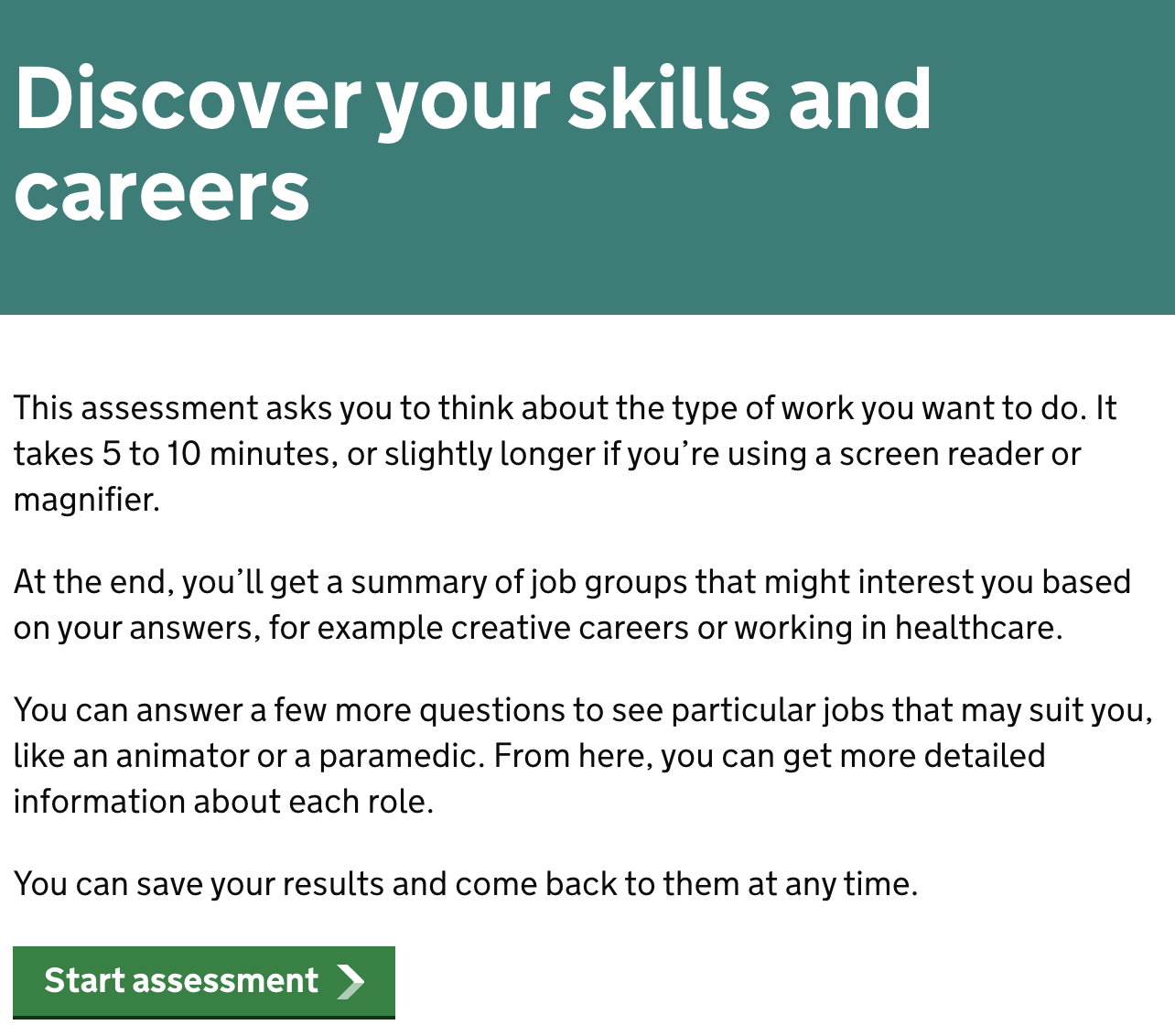

Notes from some Digital Service Standard Assessors on the Beta Assessment

The Beta Assessment is probably the one I get the most questions about, primarily: “When do we actually go for our Beta Assessment and what does it involve?” Firstly, what is an Assessment? Why do we assess products and services? If you’ve never been to a Digital Service Standard Assessment it can be daunting; so…

-

Round and round we go.

In other words, Agile isn’t linear, so stop making it look like it is. Most people within the public sector who work in Digital transformation have seen the GDS version of the Alpha lifecycle, which aims to demonstrate that developing services starts with user needs, and that projects will move from Discovery to Live, with…

-

Speak Agile To Me:

I have blogged about some of these elsewhere, but here is a quick glossary of terms that you might hear when talking Agile or Digital Transformation.